Next generation reservoir computing

Gauthier, D. J., Bollt, E., Griffith, A., & Barbosa, W. A. (2021). Next generation reservoir computing. Nature Communications, 12(1), 1-8.

- best suited to processing information generated by dynamical systems, using observed time-series data, even when the systems display chaotic or complex spatiotemporal behavior

- training happens only in the output layer (the linear readout); the input and reservoir weights stay fixed

- needs only small training data sets and is computationally efficient
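Because only the readout is trained, fitting an RC (traditional or NG-RC) reduces to one regularized linear least-squares solve; the paper uses ridge regression for this. A minimal NumPy sketch, assuming feature vectors are stored as the columns of `O`. The function name `train_readout` and the default `alpha` are illustrative, not taken from the paper:

```python
import numpy as np


def train_readout(O: np.ndarray, Y: np.ndarray, alpha: float = 1e-6) -> np.ndarray:
    """Ridge-regression readout (hypothetical helper).

    O: (n_features, n_samples), one feature vector per column.
    Y: (n_outputs, n_samples), desired outputs.
    Returns W_out of shape (n_outputs, n_features) minimising
    ||Y - W_out O||^2 + alpha * ||W_out||^2.
    """
    gram = O @ O.T + alpha * np.eye(O.shape[0])
    # W_out = Y O^T (O O^T + alpha I)^{-1}; gram is symmetric, so solve
    # gram @ W_out.T = O @ Y.T instead of forming the inverse.
    return np.linalg.solve(gram, O @ Y.T).T


# Usage: recover a known linear map from noiseless synthetic data.
rng = np.random.default_rng(0)
W_true = np.array([[1.0, -2.0, 0.5]])
O = rng.normal(size=(3, 200))
Y = W_true @ O
W_out = train_readout(O, Y, alpha=1e-8)
print(W_out)
```

Solving the regularized normal equations with `np.linalg.solve` avoids an explicit matrix inverse; `alpha` trades fit accuracy against the size of the output weights.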

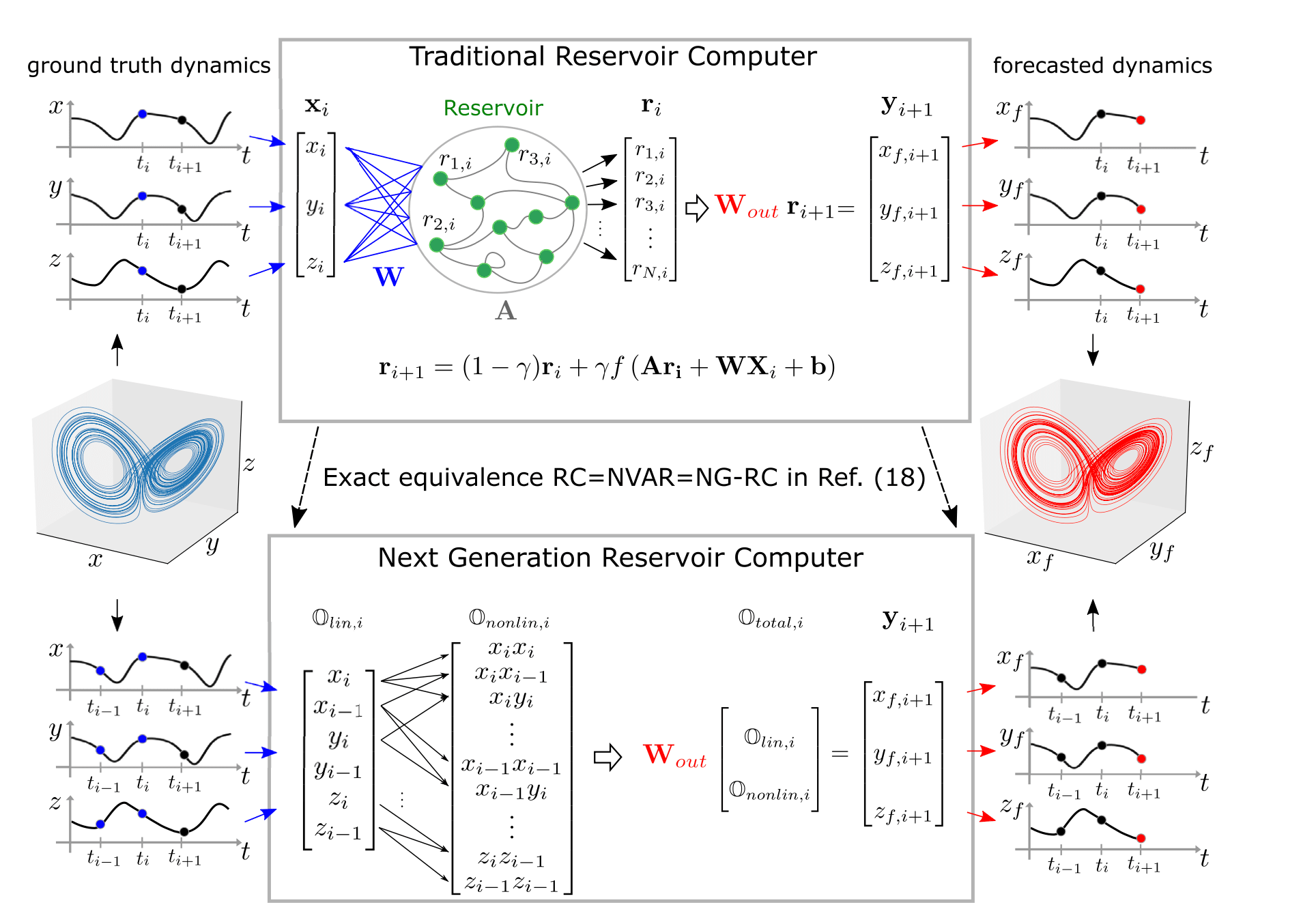

nonlinear vector autoregression (NVAR) machine = NG-RC

- no reservoir is required

- A traditional RC is implicit in an NG-RC
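The NVAR feature vector can be sketched as follows, assuming the construction used in the paper's Lorenz63 example: a constant, a linear part made of k time-delayed copies of the input spaced s steps apart, and the unique quadratic monomials of that linear part, with the readout predicting the increment X_{i+1} - X_i. The helper names (`ngrc_features`, `fit_ngrc`, `henon_x`) and the Hénon-map test signal are hypothetical choices for illustration:

```python
import numpy as np


def ngrc_features(X: np.ndarray, k: int = 2, s: int = 1) -> np.ndarray:
    """NG-RC feature vectors c ⊕ O_lin ⊕ O_nonlin for a (n_steps, d) series X.

    Row j is the feature vector at time i = j + (k - 1) * s, the first
    time at which all k delay taps X_i, X_{i-s}, ..., X_{i-(k-1)s} exist.
    """
    n = X.shape[0]
    start = (k - 1) * s
    lin = np.hstack([X[start - j * s : n - j * s] for j in range(k)])
    # Unique quadratic monomials: upper triangle of the outer product lin ⊗ lin.
    rows, cols = np.triu_indices(lin.shape[1])
    quad = lin[:, rows] * lin[:, cols]
    const = np.ones((lin.shape[0], 1))
    return np.hstack([const, lin, quad])


def fit_ngrc(X, k=2, s=1, alpha=1e-8):
    """Ridge-regress W so that X_{i+1} - X_i ≈ features_i @ W."""
    start = (k - 1) * s
    feats = ngrc_features(X, k, s)[:-1]    # feature vectors at times start .. n-2
    targets = X[start + 1:] - X[start:-1]  # increments X_{i+1} - X_i
    gram = feats.T @ feats + alpha * np.eye(feats.shape[1])
    return np.linalg.solve(gram, feats.T @ targets)


def henon_x(n, a=1.4, b=0.3, discard=100):
    """Scalar Hénon series x_{i+1} = 1 - a x_i^2 + b x_{i-1}, shape (n, 1)."""
    x = np.zeros(n + discard)
    for i in range(2, n + discard):
        x[i] = 1.0 - a * x[i - 1] ** 2 + b * x[i - 2]
    return x[discard:, None]


X = henon_x(1000)
W = fit_ngrc(X, k=2, s=1)
pred = X[1:-1] + ngrc_features(X, 2, 1)[:-1] @ W  # one-step forecasts of X[2:]
print(np.max(np.abs(pred - X[2:])))
```

With a scalar observation of the Hénon map, two delay taps (k = 2) are needed because the next value depends on both the current and the previous value; the increment is then an exact linear combination of the features, so the one-step error is tiny. No reservoir appears anywhere: the features are built directly from delayed inputs. For d = 3 and k = 2 the vector has 1 + 6 + 21 = 28 entries.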

Traditional RC

The purpose of an RC is to broadcast input data X into a higher-dimensional reservoir network composed of N interconnected nodes, and then to combine the resulting reservoir state into an output Y through a trained linear readout.

- the reservoir nodes use a nonlinear activation function, e.g. tanh

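- or the nodes are linear and the nonlinearity is moved into the feature vector fed to the readout (e.g. quadratic products of the reservoir states)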
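A minimal sketch of both reservoir variants, assuming a common leaky-integrator update r_{i+1} = (1 - γ) r_i + γ f(A r_i + W_in u_i) with a random recurrent matrix A rescaled to spectral radius 0.9. All function names and parameter values are illustrative assumptions. The linear variant is paired with a quadratic feature vector, one common way of moving the nonlinearity into the readout:

```python
import numpy as np


def make_reservoir(n_nodes=100, n_inputs=1, rho=0.9, seed=0):
    """Random recurrent matrix A, rescaled to spectral radius rho, and input matrix W_in."""
    rng = np.random.default_rng(seed)
    A = rng.normal(size=(n_nodes, n_nodes))
    A *= rho / np.max(np.abs(np.linalg.eigvals(A)))
    W_in = rng.uniform(-0.5, 0.5, size=(n_nodes, n_inputs))
    return A, W_in


def run_reservoir(A, W_in, U, leak=0.5, activation=np.tanh):
    """r_{i+1} = (1 - leak) r_i + leak * f(A r_i + W_in u_i), from r_0 = 0; returns all states."""
    r = np.zeros(A.shape[0])
    states = []
    for u in U:
        r = (1 - leak) * r + leak * activation(A @ r + W_in @ u)
        states.append(r)
    return np.array(states)


def quadratic_features(states):
    """Readout features for a linear reservoir: 1 ⊕ r ⊕ unique products r_a r_b."""
    rows, cols = np.triu_indices(states.shape[1])
    quad = states[:, rows] * states[:, cols]
    return np.hstack([np.ones((len(states), 1)), states, quad])


def identity(z):
    return z


A, W_in = make_reservoir()
U = np.sin(0.1 * np.arange(300))[:, None]  # (300, 1) input series
lin = run_reservoir(A, W_in, U, activation=identity)
nonlin = run_reservoir(A, W_in, U)

# A linear reservoir obeys superposition; a tanh reservoir does not.
print(np.allclose(run_reservoir(A, W_in, 2 * U, activation=identity), 2 * lin))  # True
print(np.allclose(run_reservoir(A, W_in, 2 * U), 2 * nonlin))                    # False
print(quadratic_features(lin).shape)                                            # (300, 5151)
```

The superposition check makes the difference concrete: doubling the input exactly doubles a linear reservoir's states, but not a tanh reservoir's. The quadratic feature count grows with the square of N (5151 features for N = 100).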

NG-RC

- needs a dramatically shorter warm-up period than traditional RCs: only enough past samples to fill its time-delay taps
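One way to see the warm-up difference: a traditional reservoir must run until its state no longer depends on its arbitrary initial condition. This can be measured by driving two copies from different random initial states with the same input and counting the steps until they agree. An NG-RC feature vector only needs the (k - 1)s past samples that fill its delay taps. The sketch assumes a tanh reservoir with spectral radius 0.9, leak rate 0.5 and a tolerance of 1e-6; these values and the function names are hypothetical:

```python
import numpy as np


def esn_warmup_steps(U, n_nodes=100, rho=0.9, leak=0.5, tol=1e-6, seed=0):
    """Steps until two tanh reservoirs, started from different random states but
    driven by the same input U, agree to within tol in every node."""
    rng = np.random.default_rng(seed)
    A = rng.normal(size=(n_nodes, n_nodes))
    A *= rho / np.max(np.abs(np.linalg.eigvals(A)))
    W_in = rng.uniform(-0.5, 0.5, size=(n_nodes, U.shape[1]))
    r1, r2 = rng.uniform(-1.0, 1.0, size=(2, n_nodes))
    for step, u in enumerate(U, start=1):
        r1 = (1 - leak) * r1 + leak * np.tanh(A @ r1 + W_in @ u)
        r2 = (1 - leak) * r2 + leak * np.tanh(A @ r2 + W_in @ u)
        if np.max(np.abs(r1 - r2)) < tol:
            return step
    return None  # the two copies did not agree within len(U) steps


def ngrc_warmup_steps(k, s):
    """An NG-RC feature vector only needs the (k - 1) * s samples that fill its delay taps."""
    return (k - 1) * s


U = np.sin(0.1 * np.arange(2000))[:, None]
print("traditional RC warm-up:", esn_warmup_steps(U))
print("NG-RC warm-up (k=2, s=1):", ngrc_warmup_steps(2, 1))
```

The reservoir count depends on the spectral radius, leak rate and input; it typically grows as the spectral radius approaches 1, while the NG-RC count is fixed by k and s alone.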

Terminology

Vanishing gradient problem

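The vanishing gradient problem arises when artificial neural networks are trained with gradient descent and backpropagation. In each training iteration, every weight is updated in proportion to the partial derivative of the error function with respect to that weight. In some cases, however, the gradient becomes vanishingly small, so the weights are not effectively updated, and the network may stop training altogether. To illustrate the cause: a traditional activation function such as the hyperbolic tangent has a derivative in the range (0, 1], and backpropagation computes gradients with the chain rule.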
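A quick numerical check of the derivative range used in this argument, assuming NumPy; it compares the closed form tanh'(x) = 1 - tanh(x)^2 against a central finite difference:

```python
import numpy as np

x = np.linspace(-6.0, 6.0, 1201)
dtanh = 1.0 - np.tanh(x) ** 2  # closed form: tanh'(x) = 1 - tanh(x)^2

# Cross-check the closed form with a central finite difference.
h = 1e-5
numeric = (np.tanh(x + h) - np.tanh(x - h)) / (2 * h)

print(np.max(np.abs(numeric - dtanh)))  # tiny: the two agree
print(dtanh.max())                      # maximum, reached at x = 0
print(dtanh.min())                      # positive, but only about 2.5e-5 at |x| = 6
```

The derivative never exceeds 1 and decays quickly once a unit saturates, which is why products of many such factors tend to be small.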

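As a result, computing the gradient of the early ("front") layers of an n-layer network multiplies n of these small numbers together, so the gradient (the error signal) decreases exponentially with n and the early layers train very slowly.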
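The exponential decay can be seen in a hypothetical one-unit-per-layer network h_l = tanh(w h_{l-1} + b) with w = 1 and b = 1. These values are chosen only so that every unit sits where tanh' < 1. The gradient of the output with respect to the input is the product of the n local factors w tanh'(z_l):

```python
import numpy as np


def chain_gradient(n_layers, x=0.0, w=1.0, b=1.0):
    """d h_n / d x for the scalar chain h_l = tanh(w * h_{l-1} + b), h_0 = x.

    The chain rule makes this a product of n local factors w * tanh'(z_l);
    for a scalar chain the product is the same whether it is accumulated
    forward (as here) or backward (as in backpropagation).
    """
    h, grad = x, 1.0
    for _ in range(n_layers):
        h = np.tanh(w * h + b)
        grad *= w * (1.0 - h ** 2)  # tanh'(z) = 1 - tanh(z)^2
    return grad


for n in (1, 2, 5, 10, 20):
    print(n, chain_gradient(n))
```

Every factor lies in (0, 1) here, and once the units settle near their fixed point each extra layer shrinks the gradient by a factor below 0.1.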